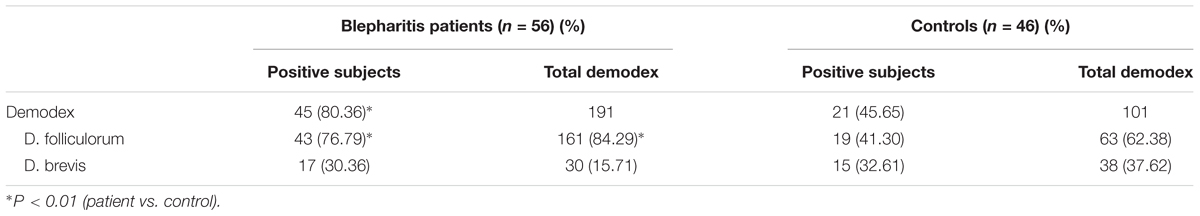

Let’s get a bird’s eye view of the archive layout: Each collection's last slice is a special "EOF" slice. In the body, we interleave slices of data from the collections. The prelude records the number of collections backed up, and other metadata. The new mongodump archive consists of two sections: the prelude and the body. To do that, while preserving the throughput-maximizing properties of concurrent reads, we leveraged some Golang constructs - including reflection and channels - to safely permit multiple goroutines to concurrently feed data into the archive.

Our new version was designed to support these use cases. However, since the old mongodump wrote each collection to a separate file, it did not work for two very common use cases for database backup utilities: 1) streaming the backup over a network, and 2) streaming the backup directly into another instance as part of a load operation. Thus, a previous version of mongodump concurrently read data across collections, to achieve maximum throughput. To prevent stalls from reducing overall throughput, you can enqueue reads from multiple collections at once. When reading from a collection, requests are often preempted, when other processes obtain a write lock in that collection.

In MongoDB, data is organized into collections of documents.

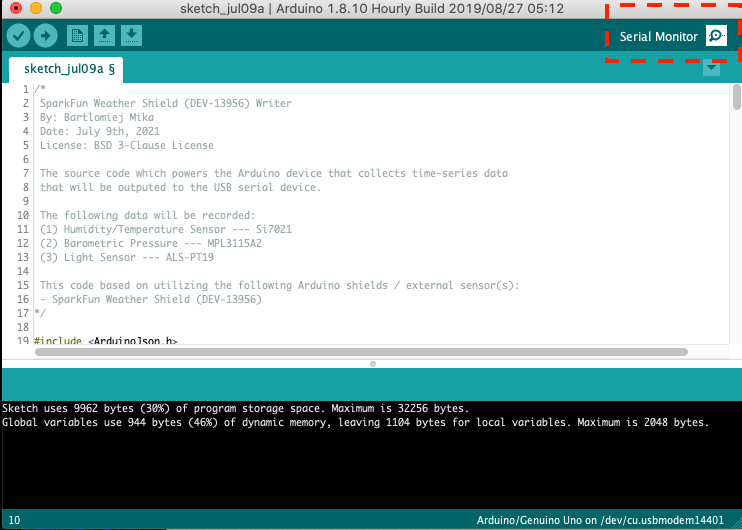

#Golang goserial serial#

The Go language is great for concurrency, but when you have to do work that is naturally serial, must you forgo those benefits? We faced this question while rewriting our database backup utility, mongodump, and utilized a “divide-and-multiplex” method to marry a high-throughput concurrent workload with a serial output.
